Every few months social media loses its collective mind over a new AI breakthrough. This week it was DeepSeek, whose just-as-good but cheaper and less resource-intensive version of GenAI exploded into the collective consciousness just days after the Trumpian $500bn announcement of Stargate.

Tech commentators have engaged in an arms race to declare which model is the best, which company is pulling ahead and which particular shade of doomsday or utopia we’re heading towards. Naturally, this made ideal fodder for a second Sidebar conversation.

The Real Story: Innovation from the Fringe

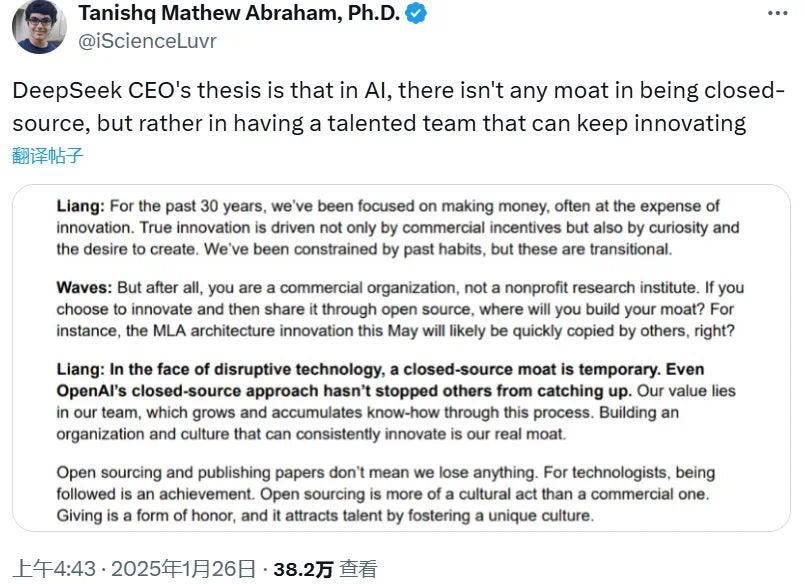

DeepSeek’s rise has been framed as a technological marvel, and it probably is, though that framing might miss a bigger point. What’s truly interesting isn’t just the model, but how the company structured itself to build it. DeepSeek’s CEO doesn’t claim that the tech, or even their decision to open-source it, is the real advantage. Their real strength lies in organisational culture and design through decentralisation: giving employees the freedom to be curious, experiment and actually create.

This is the deeper lesson: the development of AI tells us more about innovation in the bigger picture than it does about the technology that enables it.

Big incumbents would like to believe that their financial heft and ability to throw vast amounts of money at a problem give them an unfair advantage in solving it. And they might be right. However, lots of money and resources tend to come with organisational and bureaucratic baggage. It typically slows you down, and muffles voices to boot.

Governments and tech giants are betting enormous sums on AI development, assuming the sheer scale of their resources guarantees superiority. This is why Stargate was such a big announcement. Yet DeepSeek, constrained by chip limitations, took a different route by rethinking how to approach the problem rather than brute-forcing it. They focused on efficiency and adaptability, and used constraints as a catalyst for creativity. Sometimes, a lack of resources forces different, more creative, more elegant thinking.

AI as a Tool, Not an Unaccountability Machine

Our approach to AI mirrors how we approach business challenges in general. Do we see things from the top down, as if there were a single best way? Are we more open-minded and discerning about how we solve problems and enhance decision-making? Or do we let AI become an excuse to dodge responsibility? There’s a tendency to treat AI as an Unaccountability Machine, a catch-all excuse for leaders who want to deflect blame. Something goes wrong? Not our fault. The AI did it. If you haven’t read Dan Davies’ book on this, you really should.

AI’s most valuable role isn’t as an omniscient overlord handing down orders; it’s as a partner that enhances human intuition and insight. The best use cases are about augmenting human capability first, not replacing workers.

We must consider the downside of over-optimisation too. AI, when applied too aggressively, can strangle spontaneity and creativity in the same way as a centralised, top-down management system. If everything is optimised to the nth degree, don’t we risk eliminating the very randomness and adaptability that make systems resilient?

Most decision makers in business will tell you that data should guide, not decide. But when the data comes wrapped in a jovial chat interface, it’s easy to forget. Rather than taking every decision, AI should improve human decisions by surfacing relevant data, highlighting blind spots, helping spot new options, and coaching the decision maker. There are some really nice real-world examples of this right now, including airports giving employees varying levels of digital twin access depending on their role, so they can act on better-informed intuition as events unfold, drawing on data processed in ways that were previously impossible.

AI can enable better decisions only in a culture that values decision-making and backs its employees to make decisions. In risk-averse organisations, AI becomes another excuse to avoid accountability, worsening the way work happens.

Reframing AI: A More Optimistic Path Forward

If AI’s development is a metaphor for broader innovation, the lesson should be that it’s not about the tech itself, but how we structure organisations, empower people and make decisions. There isn’t one best way forward, but rather lots of options to apply depending on the context. In the same way, no single AI tool tends to be overarching; being able to use several and integrate them easily leads people to imagine a greater range of outcomes.

The companies (and societies) that will thrive in an AI-driven world aren’t necessarily those with the biggest models or the most compute power. As we’ve just seen demonstrated, they’ll be the ones that cultivate curiosity, intuition and creativity.

If a hedge fund spinout can challenge industry giants by decentralising authority and empowering its people, what could businesses in other industries learn from that approach? How could organisations rethink their structures, not just for AI, but for innovation as a whole? Is this bringing the likes of the innovation skunkworks back into fashion, just with a whole heap more compute behind it?

AI really isn’t the revolution, but the changing way we work is.

It brings to mind James C. Scott’s Seeing Like a State, in which he suggests that top-down optimisation might maximise short-term efficiencies but often erodes long-term resilience. Longer term, winners will be those who foster adaptability, encourage human ingenuity, and recognise that AI amplifies (or destroys!) good thinking, but rarely originates it.
